App-Related News

- September 28, 2023

- AI

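ChatGPT is getting voice conversation and image recognition features!

OpenAI announced on its official blog that voice conversation and image recognition capabilities are being added to ChatGPT.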

https://openai.com/blog/chatgpt-can-now-see-hear-and-speak

nishida at 2023-09-28 10:00:00

- September 27, 2023

- Mac

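The new macOS Sonoma has been released

The screen savers have been refreshed, and widgets can now reportedly be placed on the desktop.

The built-in Notes app has gained the ability to view PDFs, and when sharing your screen in FaceTime, Zoom, and similar apps, you can now apparently overlay your own camera feed on the shared content.

Some of these features were already achievable with third-party services, but it is convenient to have them available as standard OS features.

Among the new features, I thought the one that displays effects just by making hand gestures toward the camera looked like fun.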

Wednesday's writer: Tanaka

tanaka at 2023-09-27 10:00:00

Microsoft has recently unveiled its AI companion

Copilot begins rolling out on September 26 as part of a free Windows 11 update.

Microsoft Copilot, Microsoft's AI companion, is designed to integrate with Microsoft applications and experiences, including Microsoft 365, Windows 11, Edge, and Bing. Users will be able to get AI support for a wide range of tasks in their workflows across these applications.

Pricing for Microsoft Copilot depends on the application it is integrated with. Beginning November 1, enterprise customers can access Microsoft 365 Copilot for $30 per user per month.

For the other applications, Microsoft has announced that the initial version of Copilot will arrive as part of a free update to Windows 11 beginning September 26. It will also be rolled out progressively across Bing, Edge, and Microsoft 365 Copilot throughout the fall.

Bing is also set to add support for OpenAI's latest DALL·E 3 model and to deliver more personalized answers that take your search history into account. In addition, Bing is introducing an AI-powered shopping feature for a more tailored shopping experience, and Bing Chat Enterprise is receiving updates that make it more mobile-friendly and more visual.

Asahi

waithaw at 2023-09-26 10:00:00

- September 25, 2023

- AI

Generative AI for YouTube creators

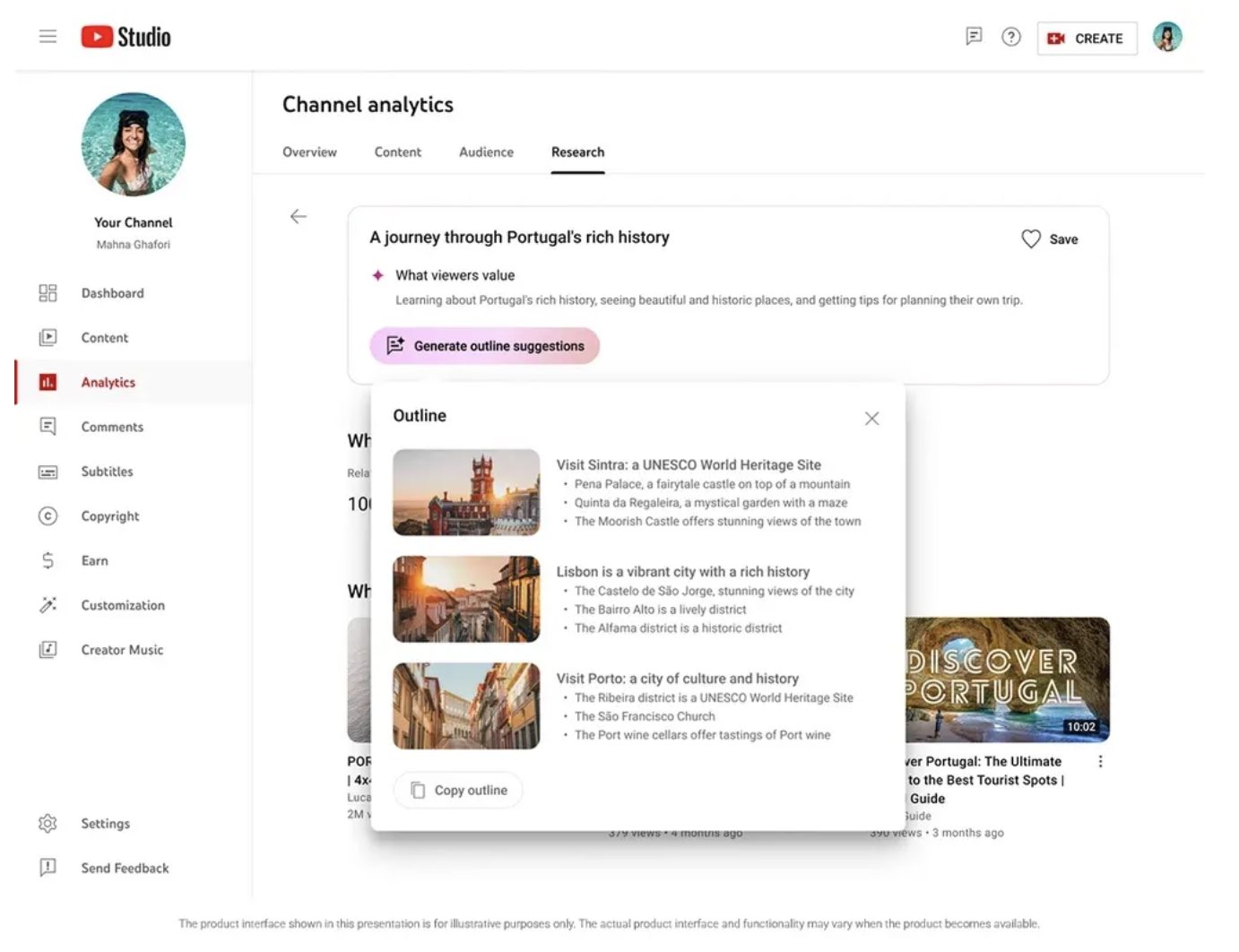

At a YouTube event on September 21, CEO Neal Mohan revealed that the platform is testing new generative AI tools that suggest video topics in YouTube Studio and ease the brainstorming process for content creators.

Mohan explained that the inspiration tool, called “AI Insights for Creators,” generates data-driven ideas based on what viewers are already watching.

As an example, they showed a travel content creator whose viewers are most interested in getting trip ideas. The AI tool then suggests a video idea that highlights that interest for the subscribers.

This feature is currently being tested with some content creators. According to YouTube, in the first test, more than 70% of respondents said that “AI Insights helped them come up with ideas for videos.”

It will be released in YouTube Studio next year.

Yuuma

yuuma at 2023-09-25 10:00:00

- September 21, 2023

- AI

OpenAI GPT API (13): Using It in a Web App

nishida at 2023-09-21 10:00:00